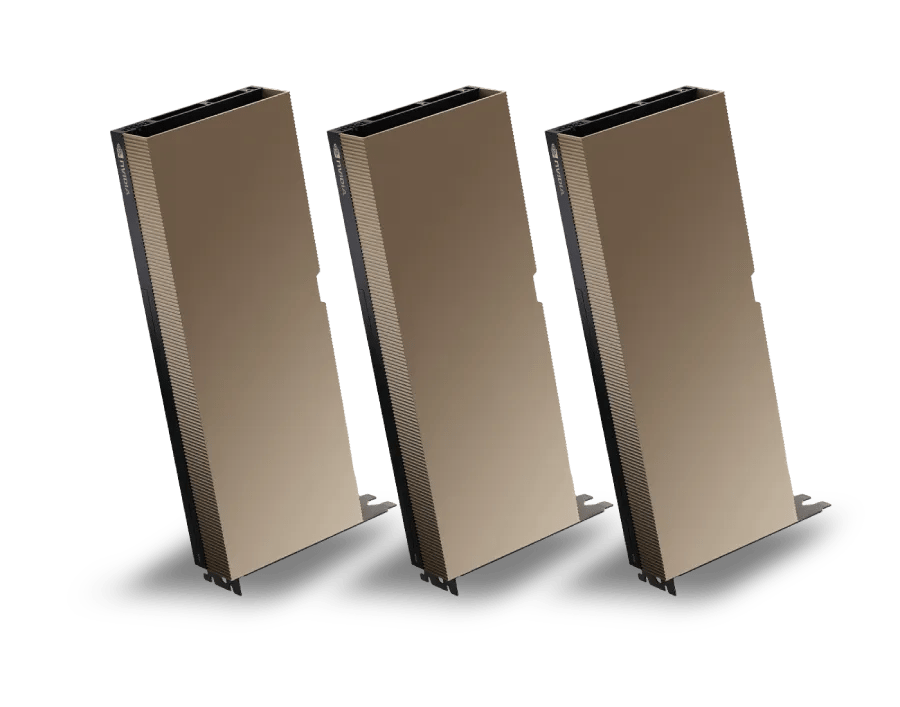

NVIDIA H100 PCIe

Power Cutting-Edge AI with NVIDIA H100 PCIe

Designed to power the world's most advanced workloads, the NVIDIA H100 PCIe excels in AI, data analytics, and HPC applications, and offers unmatched computational speed and data throughput. Available directly through Skyline.

Unrivalled Performance in...

AI Capabilities

Up to 30x faster inference and up to 9x faster model training than the NVIDIA A100.

Maths Precision

Supports a wide range of maths precisions, including FP64, FP32, FP16, and INT8.
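To make the precision list concrete, here is a minimal Python sketch of how the storage format changes the memory needed for a model's weights alone. The byte widths are the standard sizes for each format. The 30-billion-parameter model is a hypothetical example, and the 80 GB capacity is the figure given in the specifications on this page. Activations, optimiser state and framework overhead are ignored, so treat the result as a rough lower bound.

```python
# Bytes per value for the precisions listed above (standard format widths).
BYTES_PER_VALUE = {"FP64": 8, "FP32": 4, "FP16": 2, "INT8": 1}

GPU_MEMORY_GB = 80  # H100 PCIe memory capacity, as stated on this page


def weights_gb(num_params: int, precision: str) -> float:
    """Return the size of the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_VALUE[precision] / 1e9


def fits_on_card(num_params: int, precision: str) -> bool:
    """True if the weights alone fit in GPU memory (all overheads ignored)."""
    return weights_gb(num_params, precision) <= GPU_MEMORY_GB


if __name__ == "__main__":
    params = 30_000_000_000  # hypothetical 30B-parameter model
    for precision in BYTES_PER_VALUE:
        size = weights_gb(params, precision)
        verdict = "fits" if fits_on_card(params, precision) else "does not fit"
        print(f"{precision}: {size:.0f} GB of weights, {verdict} in {GPU_MEMORY_GB} GB")
```

Under these assumptions the hypothetical model fits on one card in FP16 or INT8 but not in FP32 or FP64, which is why the choice of precision often decides how many GPUs a workload needs.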

HPC Performance

Up to 7x higher performance for HPC applications.

Energy Efficiency

26x more energy efficient than CPUs.

Key Features

PCIe Form Factor

Designed with a standard PCIe form factor, ensuring compatibility with a wide range of systems.

High Performance Architecture

Optimised for diverse workloads, the NVIDIA H100 PCIe is especially adept at handling AI, machine learning, and complex computational tasks.

Scalable Design

The modular nature of the NVIDIA H100 PCIe allows for easy scalability and integration into existing systems.

Enhanced Connectivity

The NVIDIA H100 PCIe handles swift data transfer with ease, essential for tasks involving large data sets and complex computational models.

Advanced Storage Capabilities

Local NVMe storage in GPU nodes and a parallel file system provide rapid data access for distributed training.

Better Memory Bandwidth

Offers the highest PCIe card memory bandwidth, exceeding 2,000 GB/s, ideal for handling the largest models and most massive datasets.
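As a rough illustration of what that bandwidth means in practice, the sketch below estimates the minimum time to stream a given amount of data from GPU memory once. It assumes the quoted 2,000 GB/s as a peak rate and the 80 GB capacity stated on this page. The function name is hypothetical, and real kernels rarely sustain peak bandwidth, so the result is a lower bound rather than a prediction.

```python
MEMORY_BANDWIDTH_GB_S = 2000  # peak memory bandwidth quoted above (assumed sustained)
GPU_MEMORY_GB = 80            # card capacity, as stated on this page


def min_read_time_ms(data_gb: float, bandwidth_gb_s: float = MEMORY_BANDWIDTH_GB_S) -> float:
    """Lower bound, in milliseconds, for reading data_gb from GPU memory once."""
    if bandwidth_gb_s <= 0:
        raise ValueError("bandwidth must be positive")
    return data_gb * 1000 / bandwidth_gb_s


if __name__ == "__main__":
    full_sweep = min_read_time_ms(GPU_MEMORY_GB)
    print(f"One pass over {GPU_MEMORY_GB} GB takes at least {full_sweep:.0f} ms")
```

At the quoted rate, one full pass over the 80 GB of memory takes at least 40 ms, which puts a ceiling on how often a memory-bound workload can touch its entire working set.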

Maximum TDP of 350 W

Operates unconstrained up to a thermal design power (TDP) of 350 W, so the card can sustain the most demanding computational workloads without thermal constraints.

Multi-Instance GPU Capability

The NVIDIA H100 PCIe features Multi-Instance GPU (MIG) capability, allowing it to partition into up to 7 isolated instances. This flexibility is crucial for efficiently managing varying workload demands in dynamic computing environments.

NVIDIA NVLink

Three built-in NVLink bridges deliver 600 GB/s bidirectional bandwidth, providing robust and reliable data transfer capabilities essential for high-performance computing tasks.

Technical Specifications

GPU: NVIDIA H100 PCIe

Base Clock: 1.065 GHz

Memory: 80 GB HBM2e

Frequently Asked Questions

We build our services around you. Our product support and product development go hand in hand to deliver you the best solutions available.

The NVIDIA H100 has a peak FP32 performance of about 67 TFLOPS in its SXM form (about 51 TFLOPS for the PCIe card), much higher than the NVIDIA A100's 19.5 TFLOPS.

The NVIDIA H100 offers higher raw performance, but other factors matter too. Memory bandwidth is one: the NVIDIA A100 80GB uses HBM2e at roughly 2 TB/s, comparable to the H100 PCIe, so bandwidth-bound tasks may see smaller gains. Power consumption is another: the NVIDIA H100 draws more power in its SXM form (up to 700 W vs. 500 W for the A100).

The NVIDIA H100 has a GPU memory of 80 GB.

The NVIDIA H100 PCIe has a TDP of 300 to 350 W.

You can rent an NVIDIA H100 GPU on Skyline from $2.12 per hour.
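For budgeting, a minimal Python sketch of the arithmetic follows. It assumes the quoted starting rate of $2.12 per GPU-hour applies flat to every hour and every GPU. The actual rate may be higher depending on configuration, and the function name and parameters are hypothetical.

```python
from decimal import Decimal

HOURLY_RATE_USD = Decimal("2.12")  # starting price quoted above; actual rate may differ


def rental_cost(hours: float, gpus: int = 1, rate: Decimal = HOURLY_RATE_USD) -> Decimal:
    """Estimated cost in USD for renting `gpus` GPUs for `hours` hours at a flat rate."""
    if hours < 0 or gpus < 1:
        raise ValueError("hours must be non-negative and gpus at least 1")
    total = Decimal(str(hours)) * gpus * rate
    return total.quantize(Decimal("0.01"))  # round to whole cents


if __name__ == "__main__":
    print(f"1 GPU for 24 hours:  ${rental_cost(24)}")
    print(f"8 GPUs for 30 days:  ${rental_cost(24 * 30, gpus=8)}")
```

`Decimal` is used instead of floating point so that the totals stay exact to the cent.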