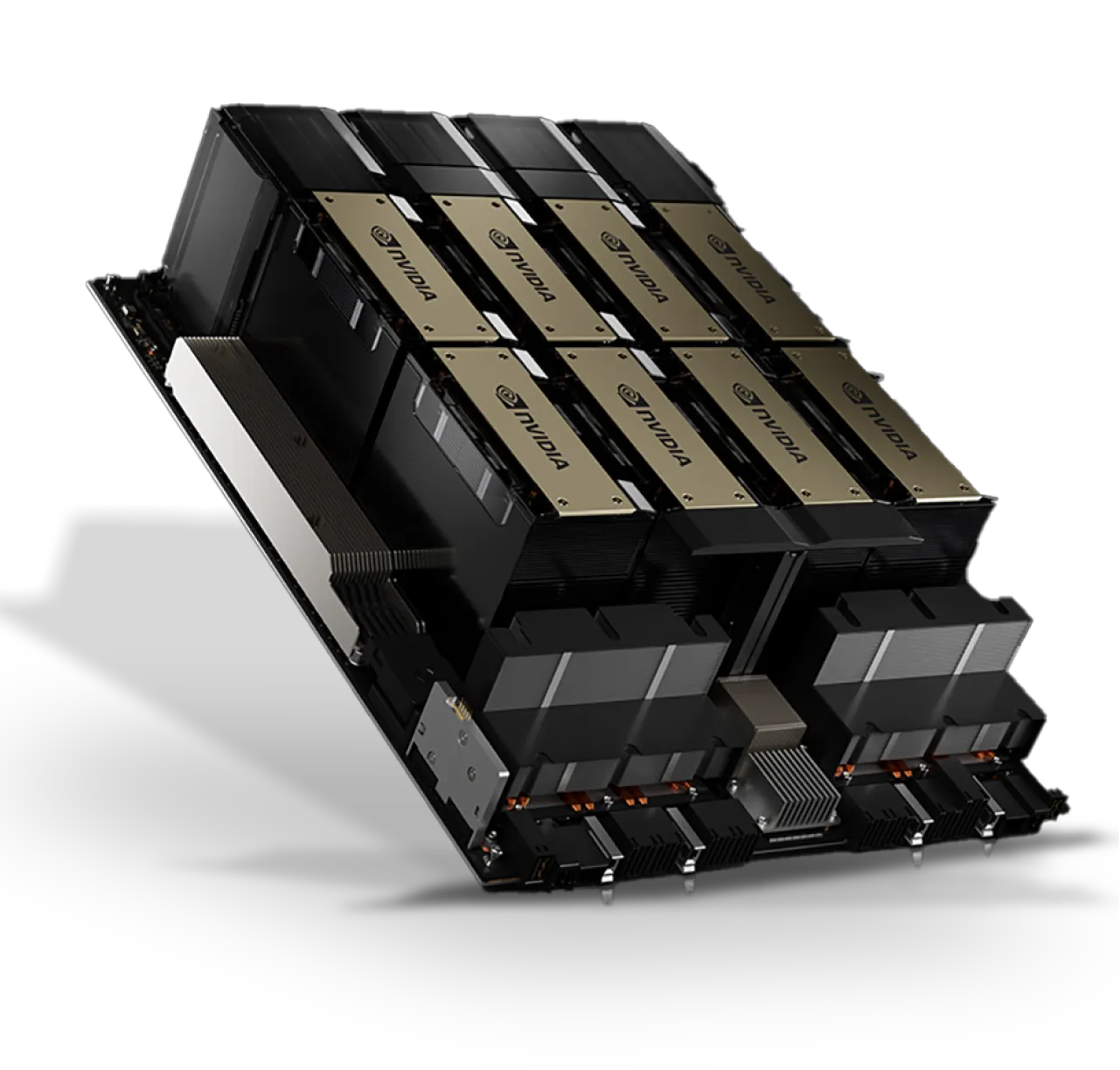

NVIDIA H100 SXM

A Supercloud Specialised for AI

Introducing the most advanced AI cluster of its kind: Skyline's NVIDIA H100 SXM is built on a custom DGX reference architecture. Deploy from 8 to 16,384 cards in a single cluster, available only through Skyline's Supercloud reservation.

The Largest Single Clusters of NVIDIA H100s

We ensure a surplus of power to run even the most demanding workloads. With up to 16,384 H100 80G cards operating in a single cluster, there are no break points, allowing for multitenant capabilities.

Built with NVIDIA DGX Protocols

Built on NVIDIA DGX reference architecture, the NVIDIA H100 SXM model integrates seamlessly into the DGX ecosystem, providing a comprehensive solution for enterprise-level AI development and applications.

Unmatched Network Connectivity

While most platforms boast "fast" connectivity, typically ranging from 200 Gbps to 800 Gbps, Skyline's Supercloud operates at 3.2 Tbps (3,200 Gbps), four times the top of that range.
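To put these figures in context, the short sketch below converts link speeds to gigabytes per second and estimates an ideal transfer time. It reads the figures above as gigabits and terabits per second; the 500 GB checkpoint size and the function names are assumptions chosen for illustration, and real transfers will be slower once protocol overhead and storage limits are included.

```python
def gbps_to_gigabytes_per_second(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Ideal time to move size_gb gigabytes over a link, ignoring all overhead."""
    return size_gb / gbps_to_gigabytes_per_second(link_gbps)


# Hypothetical checkpoint size, used only for illustration.
CHECKPOINT_GB = 500

for label, gbps in [("200 Gbps", 200), ("800 Gbps", 800), ("3.2 Tbps", 3200)]:
    rate = gbps_to_gigabytes_per_second(gbps)
    seconds = transfer_seconds(CHECKPOINT_GB, gbps)
    print(f"{label}: {rate:.0f} GB/s, {seconds:.2f} s for {CHECKPOINT_GB} GB")
```

Under these assumptions, 3.2 Tbps corresponds to 400 GB/s, against 25 GB/s for a 200 Gbps link.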

Unrivalled Performance in…

AI Training & Inference

30x faster inference speed and 9x faster training speed.*

LLM Performance

30x faster processing*, enhancing language model performance.

Single Cluster Scalability

The AI Supercloud environment is the largest single cluster of H100s available outside of public cloud.

Connectivity

Specifically designed to allow all nodes to fully utilise their CPU, GPU and network capabilities without limits.

Customised & Scalable Service Delivery

Bespoke Solutions for Diverse Needs

Every business is unique, and at Skyline, we tailor our service delivery to match your specific requirements. We personally onboard you to the Supercloud, understanding your unique challenges and objectives, ensuring a solution that aligns perfectly with your business goals.

Scalability at Your Fingertips

Flexibility and scalability are the cornerstones of our service delivery model. With clusters of up to 16,384 cards, we offer a scale and performance for AI workloads that you will not find outside of public cloud.

Supercloud Storage

The WEKA® Data Platform delivers a comprehensive and unified data management solution optimized for Supercloud environments. This platform is engineered to support dynamic data pipelines, offering high-performance data storage and processing capabilities that address every stage of the data lifecycle, from ingestion and cleansing to modeling, training, validation, and inference.

Scalable Performance

The WEKA Data Platform is engineered to provide exceptional I/O, minimal latency, and robust support for small files and diverse workloads, which is essential for the performance-intensive requirements of AI applications.

Organizations can independently and linearly scale their compute and storage resources in the cloud, efficiently managing billions of files of various types and sizes. The platform is fully certified for NVIDIA DGX SuperPOD.

Simplified Data Management

The platform features multi-tenant, multi-workload, multi-performant, and multi-location capabilities with a unified management interface, streamlining data management for complex AI pipelines across various environments.

Secure & Compliant

WEKA implements robust encryption for data in-flight and at-rest, ensuring comprehensive compliance and governance for sensitive AI data assets.

Energy Efficient

The platform reduces energy consumption and carbon footprint by minimizing data pipeline idle time, extending hardware lifespan, and facilitating cloud workload migration.

The WEKA Data Platform can be utilized within Supercloud environments or as a complementary option for standard cloud offerings through Skyline, providing shared NAS and Object storage with comprehensive snapshot and cloning capabilities.

Dedicated Onboarding & Support Team

Our Dedicated Onboarding & Support Team is your reliable partner, providing expert guidance and support to ensure a smooth onboarding experience and ongoing assistance, empowering you to achieve your goals with confidence.

24/7 Expert Assistance

Our commitment to excellence extends to our customer support. We offer 24/7 assistance, ensuring that expert help is always available whenever you need it. Our team is equipped to handle any query or challenge you might encounter with the HGX SXM5 H100 and your Supercloud environment.

Personalised Support & Onboarding

At Skyline, we believe in providing a customer support experience that goes beyond the conventional. We offer personalised, comprehensive solutions and are dedicated to setting up an environment specialised to your needs.

Technical Expertise and Deep Product Knowledge

Our support team possesses deep technical expertise and extensive product knowledge. This ensures that any support you receive for the NVIDIA H100 SXM and Supercloud onboarding is not just general guidance but informed, specialised assistance tailored to the nuances of this advanced technology.

Benefits of NVIDIA H100 SXM

SXM Form Factor

Optimized for maximum performance with high-density GPU configurations, advanced cooling systems, and superior energy efficiency, delivering exceptional computational power in a compact form factor.

DGX Reference Architecture

Engineered to NVIDIA's exacting DGX specifications, ensuring optimal performance for enterprise-scale AI and machine learning workloads with uncompromising reliability and throughput.

Scalable Design

Future-proof your infrastructure with a modular architecture that adapts to evolving computational demands, supporting seamless expansion within unified clusters of up to 16,384 H100 GPUs.

TDP of 700 W

Harness substantially higher thermal design power compared to PCIe variants, enabling peak computational performance for the most demanding AI and HPC applications that require sustained processing power.

NVLink & NVSwitch

Experience revolutionary interconnect performance with advanced NVLink and NVSwitch technologies, delivering dramatically higher bandwidth than standard PCIe connections for seamless multi-GPU workloads.

GPUDirect Technology

Accelerate data workflows with optimized memory access that enables direct read/write operations to GPU memory, eliminating redundant copies, reducing CPU overhead, and minimizing latency for time-sensitive applications.

Technical Specifications

GPU: NVIDIA H100 SXM

Memory: 80GB

TDP: 700W
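As a rough guide to what these per-card figures mean at cluster scale, the sketch below multiplies them out for the smallest and largest deployments mentioned on this page (8 and 16,384 cards). The function name is hypothetical, and the power figure covers GPU TDP only; CPUs, networking, storage and cooling are not included.

```python
GPU_MEMORY_GB = 80    # per-card memory from the specification list
GPU_TDP_WATTS = 700   # per-card TDP from the specification list


def cluster_totals(gpu_count: int) -> dict:
    """Aggregate GPU memory (decimal TB) and GPU-only peak power (kW)."""
    return {
        "gpus": gpu_count,
        "memory_tb": gpu_count * GPU_MEMORY_GB / 1000,
        "gpu_power_kw": gpu_count * GPU_TDP_WATTS / 1000,
    }


# Smallest and largest cluster sizes quoted on this page.
for count in (8, 16_384):
    print(cluster_totals(count))
```

On these assumptions, a full 16,384-card cluster has about 1,310 TB of GPU memory and a GPU-only peak draw of roughly 11.5 MW.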

Frequently Asked Questions

We build our services around you. Our product support and product development go hand in hand to deliver you the best solutions available.

Both NVIDIA's H100 SXM and PCIe models are powerful GPUs, but they differ in connectivity and performance. PCIe is flexible and comparatively lower in cost, while SXM offers higher memory bandwidth, faster GPU-to-GPU data transfer over NVLink and a higher power limit for extreme performance.

NVIDIA's H100 SXM is a high-performance GPU designed for data centres, optimised for demanding workloads like AI, scientific simulations and big data analytics.

The NVIDIA H100 offers up to 30x faster inference and up to 9x faster training than the A100.

The key features of NVIDIA H100 SXM include SXM Form Factor with high-density GPU configurations, efficient cooling, and energy optimisation; DGX Reference Architecture to meet enterprise-level AI demands; Scalable Design with modular architecture for seamless scalability; GPUDirect Technology for enhanced data movement; NVLink & NVSwitch for higher interconnect bandwidth; and TDP of 700W for intensive AI and HPC applications.

The NVIDIA H100 SXM has a power draw (TDP) of up to 700 W.

The NVIDIA H100 SXM rental price starts at $2.40 per hour on Skyline. For long-term use, you can reserve the NVIDIA H100 SXM starting from $1.90 per hour.
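To compare the two starting rates, the sketch below estimates the bill for a sample workload. It assumes the quoted prices apply per GPU per hour and ignores storage, networking and any minimum-commitment terms; the 8-GPU, 30-day workload and the function name are hypothetical.

```python
ON_DEMAND_USD_PER_GPU_HOUR = 2.40  # starting on-demand rate quoted above
RESERVED_USD_PER_GPU_HOUR = 1.90   # starting reserved rate quoted above


def rental_cost(gpus: int, hours: float, rate: float) -> float:
    """Total cost in USD, assuming the rate is charged per GPU per hour."""
    return round(gpus * hours * rate, 2)


# Hypothetical workload: one 8-GPU node running for 30 days.
gpus, hours = 8, 30 * 24

on_demand = rental_cost(gpus, hours, ON_DEMAND_USD_PER_GPU_HOUR)
reserved = rental_cost(gpus, hours, RESERVED_USD_PER_GPU_HOUR)

print(f"On-demand: ${on_demand:,.2f}")
print(f"Reserved:  ${reserved:,.2f}")
print(f"Saving:    ${on_demand - reserved:,.2f}")
```

For this example workload of 5,760 GPU-hours, the estimate is $13,824 on demand against $10,944 reserved.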