Power LLM with Skyline and NVIDIA GPUs

Powered by 100% renewable energy

Benefits of Cloud GPU for LLM

Large language models are transforming how we interact with language in fields such as machine translation, chatbot development and creative content generation. Rent cloud GPUs for LLMs to train large language models faster.

Unmatched Performance

Accelerate your LLM training and inference with the raw power of NVIDIA GPUs built on Tensor Core architectures.

Pre-Configured Environments

Get started quickly with pre-configured cloud LLM environments equipped with optimised software stacks and libraries, reducing setup time and complexity.

Scalability On-Demand

Scale your LLM resources up or down on demand to match your project's needs. Avoid the upfront costs and maintenance burden of managing your own hardware infrastructure.

Cost-Effective

Our pay-for-what-you-use pricing model lets you match costs to your actual usage patterns and optimize your cloud GPU spending. It is best suited to experimental projects or workloads with fluctuating resource demands.

Effortless Collaboration

Facilitate seamless collaboration on LLM projects with team members anywhere in the world. Share resources securely and work together efficiently to accelerate progress and innovation.

LLM Solutions

Personalised Learning

For educational applications, Skyline's LLM solutions enable dynamic content adaptation based on individual learning patterns. Our GPU-accelerated infrastructure supports real-time personalization that increases engagement by 40% and knowledge retention by 35% compared to traditional methods.

Legal Research & Automation

For legal professionals, our LLM infrastructure processes thousands of documents simultaneously, extracting key information with 94% accuracy. Skyline's GPU acceleration enables the analysis of complex legal texts in minutes rather than hours, dramatically improving workflow efficiency.

Creative Content Generation

For content creators and marketing teams, Skyline's LLM solutions generate high-quality, diverse creative outputs across multiple formats. Our specialized GPU configurations deliver 5x faster generation times while maintaining contextual relevance and brand consistency throughout the creative process.

Cybersecurity & Threat Detection

For security operations, our LLM-powered threat detection analyzes network patterns and identifies anomalies with unprecedented accuracy. Skyline's GPU infrastructure processes security logs 8x faster than CPU-based solutions, enabling real-time threat mitigation and reducing false positives by 76%.

Assistive Technologies

For accessibility solutions, Skyline's LLM infrastructure powers real-time translation across 95+ languages and converts speech to text with 98% accuracy. Our specialized GPU configurations enable sub-second response times for assistive applications, breaking down communication barriers and creating more inclusive digital experiences.

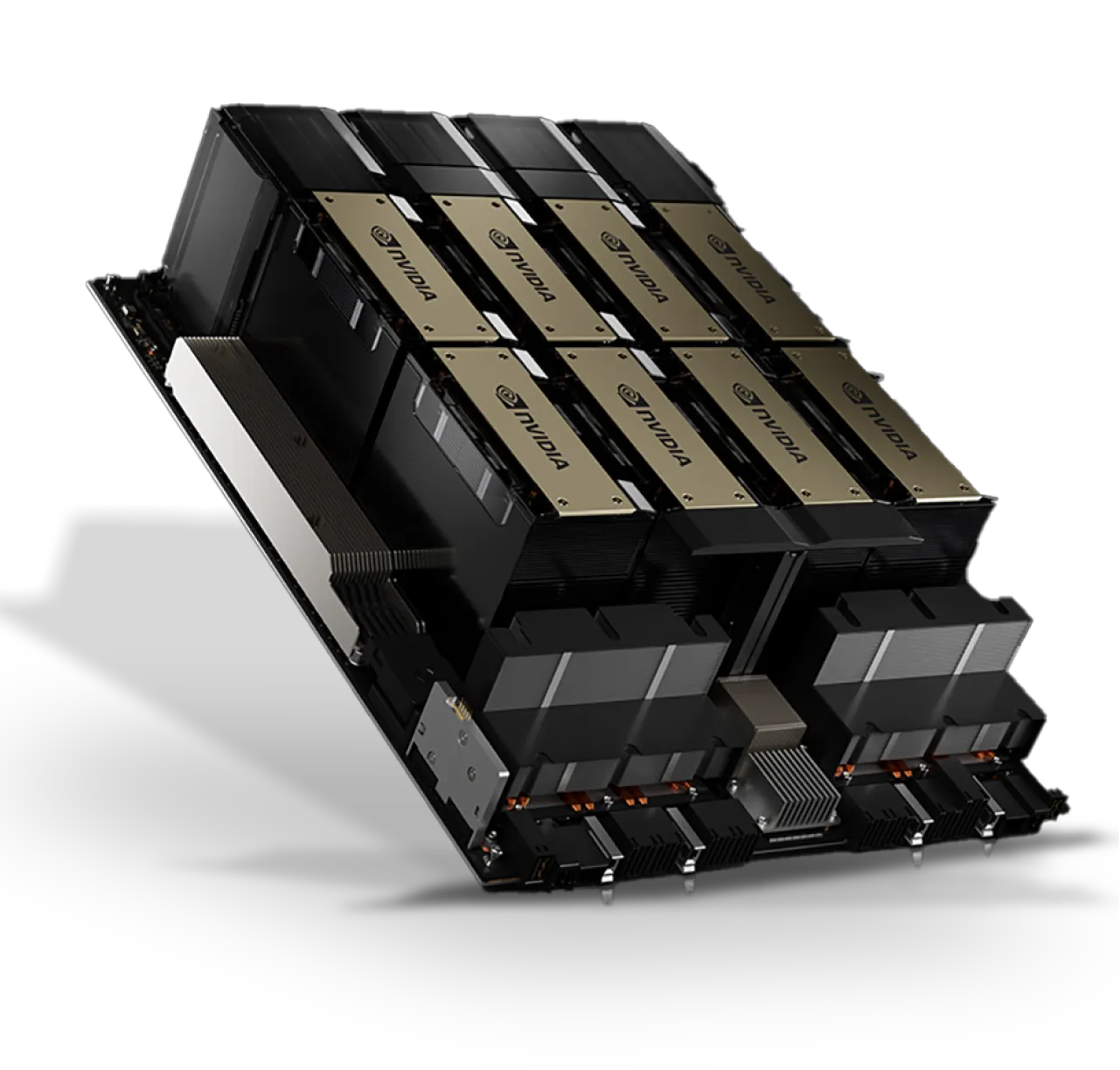

GPUs We Recommend for LLM

Achieve breakthrough results in your LLM projects with NVIDIA's cutting-edge GPU technology. Rent GPUs for LLMs on Skyline.

Frequently Asked Questions

We build our services around you. Our product support and product development go hand in hand to deliver you the best solutions available.

Yes, you can utilise multiple cloud GPUs for LLM tasks to significantly accelerate training or inference for extensive workloads.
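A common way to spread training across several GPUs is data parallelism: each GPU receives an equal shard of the batch, computes gradients locally, and the gradients are averaged (an all-reduce) so every GPU applies the same update. The Python sketch below simulates this on a single machine with a toy linear model. The "workers" are plain function calls rather than GPUs, and the function names are hypothetical, not part of Skyline or any framework.

```python
# Hypothetical illustration: simulates data-parallel gradient computation.
# Each "worker" stands in for one GPU; no GPU or Skyline API is used.

def mse_gradient(w, b, xs, ys):
    """Gradient of mean squared error for y_hat = w*x + b over one shard."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


def shard(data, num_workers):
    """Split a batch into equal contiguous shards, one per worker."""
    size = len(data) // num_workers
    return [data[i * size:(i + 1) * size] for i in range(num_workers)]


def data_parallel_gradient(w, b, xs, ys, num_workers):
    """Each worker computes a local gradient; averaging them mimics an all-reduce."""
    if len(xs) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    grads = [
        mse_gradient(w, b, xs_s, ys_s)
        for xs_s, ys_s in zip(shard(xs, num_workers), shard(ys, num_workers))
    ]
    dw = sum(g[0] for g in grads) / num_workers
    db = sum(g[1] for g in grads) / num_workers
    return dw, db


if __name__ == "__main__":
    xs = [float(i) for i in range(8)]
    ys = [3.0 * x + 1.0 for x in xs]
    print("full batch:   ", mse_gradient(0.5, 0.0, xs, ys))
    print("4 workers avg:", data_parallel_gradient(0.5, 0.0, xs, ys, num_workers=4))
```

With equal shard sizes, the average of the per-shard mean gradients equals the full-batch gradient, so adding GPUs speeds up each step without changing its result. Frameworks such as PyTorch's DistributedDataParallel perform this averaging across real GPUs.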

Using a cloud GPU for LLM provides access to high-performance computing resources without the need for expensive hardware investments. This makes it easier for you to handle the significant computational requirements of LLMs. Cloud GPUs also offer scalability, allowing you to adjust computational resources as needed. This flexibility is important for managing varying workloads and complex model training.

Skyline Cloud offers a range of GPU-powered instances ideal for training and deploying large language models. We provide powerful cloud GPUs such as the NVIDIA A100 and H100 SXM, which deliver the high compute performance needed for natural language processing models like BERT and GPT-3.

Choosing the right GPU for your LLM depends on several factors:

- **Memory:** Opt for GPUs with ample VRAM to accommodate large models and datasets.
- **Bandwidth:** High memory bandwidth is essential for faster data transfer between the GPU and memory.
- **Scalability:** Ensure the GPU can scale in multi-GPU setups for distributed training.
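The memory and bandwidth criteria above can be turned into rough arithmetic. The Python sketch below uses common rules of thumb, assumed here rather than taken from Skyline: 2 bytes per parameter for FP16/BF16 weights, and about 16 bytes per parameter to train with mixed-precision Adam. It ignores activations, the KV cache and framework overhead, so real requirements are higher. The function names, the 7-billion-parameter model and the bandwidth figure in the usage lines are hypothetical.

```python
import math

# Rough sizing helpers (hypothetical). 1 GB = 1e9 bytes; vendor VRAM figures are
# quoted loosely, so leave headroom. Activations and KV cache are NOT included.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_gb(num_params, dtype="fp16"):
    """Memory needed just to hold the model weights, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def training_gb(num_params):
    """Mixed-precision Adam: fp16 weights + fp16 grads (2 + 2 bytes) plus fp32
    master weights, momentum and variance (4 + 4 + 4 bytes) = 16 bytes/param."""
    return num_params * 16 / 1e9


def gpus_needed(required_gb, vram_gb_per_gpu):
    """Minimum GPU count, assuming the memory could be split evenly across devices."""
    return math.ceil(required_gb / vram_gb_per_gpu)


def max_decode_tokens_per_s(num_params, bandwidth_gb_s, dtype="fp16"):
    """Upper bound for batch-size-1 generation: each new token reads every weight
    once, so throughput is capped at memory bandwidth / weight size."""
    return bandwidth_gb_s / weights_gb(num_params, dtype)


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model
    print("FP16 weights (GB):", weights_gb(params))
    print("Adam training state (GB):", training_gb(params))
    print("80 GB GPUs needed to train:", gpus_needed(training_gb(params), 80))
    print("Decode bound at 1400 GB/s (tokens/s):", max_decode_tokens_per_s(params, 1400.0))
```

For example, a 7-billion-parameter model needs about 14 GB just for FP16 weights but roughly 112 GB of state to train with Adam. That is more than a single 80 GB GPU holds, which is where the scalability criterion comes in.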